Make Some Noisia

Dutch electronic megahouse Noisia has been rocking the planet with their latest album ‘Outer Edges’.

Photo by Diana Gheorghiu

It was a long wait, but one that was truly worth it. Outer Edges is essentially a concept album, and Noisia pushed the boundaries on this one by backing it up with a ‘concept tour’.

An audio-visual phenomenon with riveting content, perfect sync & melt-yo-face energy, the Outer Edges show is one we could not resist dissecting.


We visited Rampage, one of the biggest Drum & Bass events in the world, & caught up with Roy Gerritsen (Boompje Studio) & Manuel Rodrigues (DeepRED.tv), on video and lighting duty respectively, to talk about the level of excellence the Noisia crew has achieved with this concept show.

Here is a look at Diplodocus, a favorite amongst bass heads:

Video by Diana Gheorghiu

Thanks for doing this, guys! Much appreciated.

What exactly is a concept show and how is preparation for it different from other shows?

When Noisia approached us, they explained that they wanted to combine the release of their next album, “Outer Edges”, with a synchronized audio-visual performance. It had been six years since Noisia released a full album, so you can imagine it was a big deal.

Together, we came up with a plan to lay the foundation for upcoming shows. We wanted to focus on developing a workflow and pipeline to create one balanced and synchronized experience.

Normally, each element of a show (audio, light, visuals, performance) is developed in its own area. There is one general theme or concept, and everything only comes together at the end, during rehearsals.

We really wanted to create a show where we could focus on the total picture, and to develop a workflow that would let us keep refining the show and push the concept across all its elements quickly and effectively, without overlooking the details.

What was the main goal you set out to achieve as you planned the Outer Edges show?

How long did it take to come together, from start to end?

We wanted to create a show where everything is 100% synchronized and highly adaptable, with one main control computer connected to every element of the show in perfect sync. This setup gave us the ability to find the right balance and narrative between sound, performance, lights and visuals. Beyond that, we wanted a modular and highly flexible show, making it quick and easy to adapt or add new content.

We started the project in March 2016, and our premiere was at the Let It Roll festival in Prague (July 2016).

The show is designed to be “open-ended”. We record every show, and because of the open infrastructure, we are constantly refining it on all fronts.

What are the different gadgets and software you use to achieve that perfect sync between audio/video & lighting?

Roy: Back in the day, my graduation project at the HKU was a VJ mix tool based on the concept of “cue-based” triggering. Instead of the widely used timecode synchronization, where you premix all the content (the lighting and video tracks), we send a MIDI trigger for every beat and sound effect. This saves a lot of time in the content production process.

The edit and mixdown of the visuals are basically done live on stage instead of in After Effects. This means we don't have to render out 8-minute video clips and can focus on just a few key visual loops per track (every track consists of about 5 clips, which get triggered directly from Ableton Live using a custom MIDI track). Inside Ableton, we group a couple of extra designated clips so they all get triggered at the same time.

For every audio clip, we sequence separate MIDI clips for the video and lighting, which play in perfect sync with the audio. These MIDI tracks are then sent to the VJ laptop and to Manuel's lighting desk.

We understand you trigger clips in Resolume from Ableton Live using the extremely handy Max for Live patches?

Yes, we sequence a separate MIDI track for each audio track. We divided each audio track into 5 different elements (beats, snares, melody, FX, etc.), which correspond to 5 video layers in Resolume.

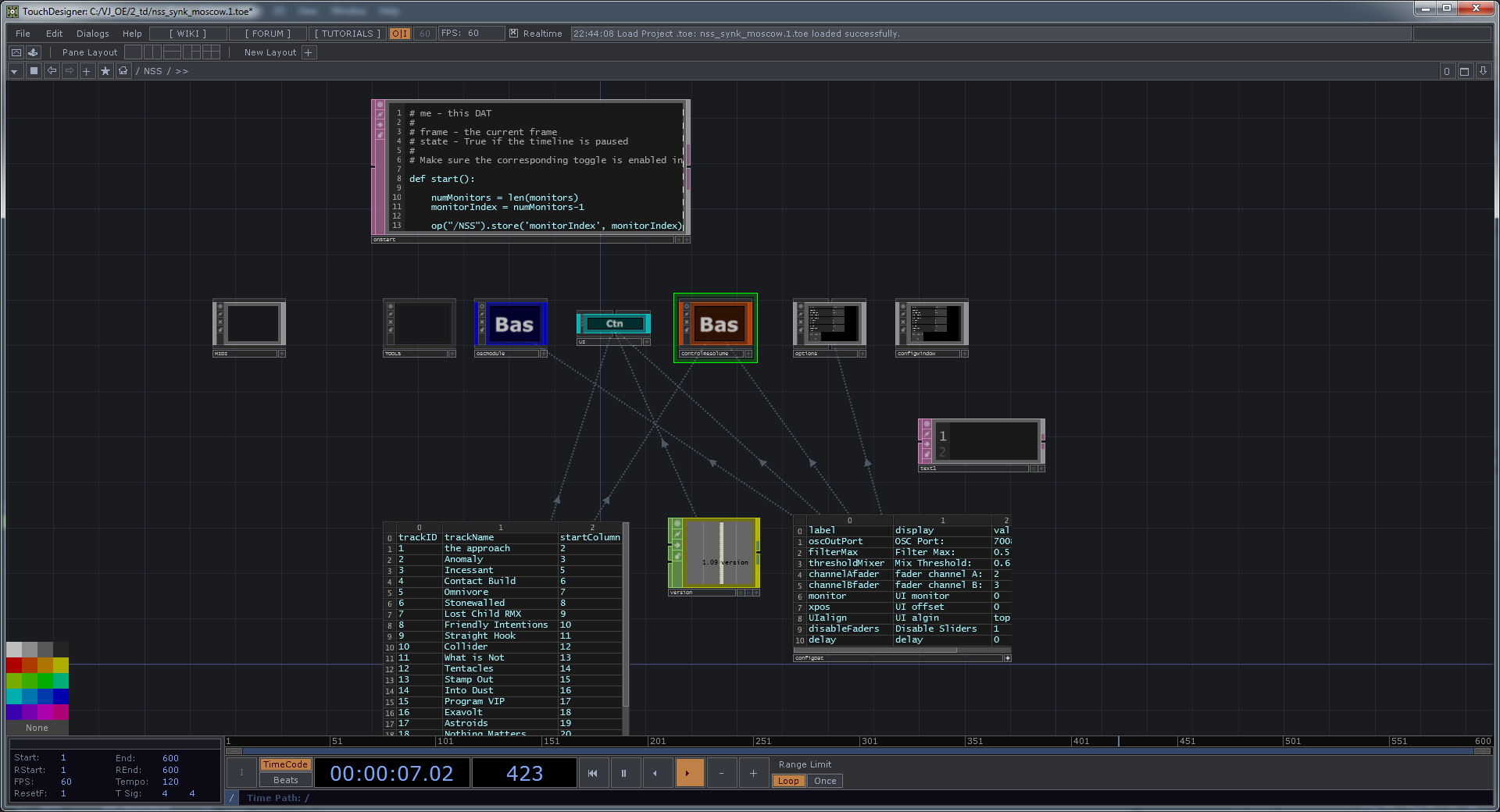
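To make the element-to-layer idea concrete, here is a minimal Python sketch of how a note on one of those MIDI lanes could be resolved to a Resolume layer and clip slot. The layer numbers, the fifth element name (`bass`, a placeholder since the interview only says "etc.") and the base-note convention (note 36 selects clip 1, note 37 clip 2, and so on) are all assumptions for illustration, not the crew's actual mapping.

```python
from dataclasses import dataclass

# Hypothetical layout: one Resolume layer per musical element of a track.
# "beats", "snares", "melody" and "fx" come from the interview; "bass" is a
# placeholder for the unnamed fifth element.
ELEMENT_LAYERS = {"beats": 1, "snares": 2, "melody": 3, "bass": 4, "fx": 5}


@dataclass(frozen=True)
class ClipTrigger:
    layer: int   # Resolume layer, 1-based
    column: int  # clip slot within that layer, 1-based


def note_to_trigger(element: str, note: int, base_note: int = 36) -> ClipTrigger:
    """Resolve a MIDI note on an element's lane to a Resolume clip slot.

    Assumed convention: base_note selects clip 1, base_note + 1 clip 2, etc.
    """
    if element not in ELEMENT_LAYERS:
        raise KeyError(f"unknown element: {element!r}")
    if not base_note <= note <= 127:
        raise ValueError(f"note must be between {base_note} and 127, got {note}")
    return ClipTrigger(layer=ELEMENT_LAYERS[element], column=note - base_note + 1)


# A snare hit sequenced on note 38 would fire the third clip on layer 2.
print(note_to_trigger("snares", 38))  # ClipTrigger(layer=2, column=3)
```

Keeping the mapping in one table means a new track only needs new MIDI clips, not a new routing setup.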

When a note gets triggered, a Max for Live patch translates it into an OSC message and sends it off to the VJ laptop. The OSC messages are caught by a small tool we built in Derivative’s TouchDesigner. In essence, this tool translates the incoming messages into OSC messages that Resolume understands, basically operating Resolume automatically with the triggers received from Ableton.
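The last hop in that chain, turning a trigger into a message Resolume understands, can be sketched in a few lines of Python with a hand-rolled OSC 1.0 encoder. This is not the crew's TouchDesigner tool; it assumes Resolume 6/7's `/composition/layers/<n>/clips/<m>/connect` address pattern and OSC input on UDP port 7000, both of which you should check against the OSC settings of your own Resolume install.

```python
import socket
import struct


def _osc_string(text: str) -> bytes:
    """OSC strings are ASCII, null-terminated and padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode an OSC 1.0 message with int32 ('i') and float32 ('f') arguments."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool) or not isinstance(arg, (int, float)):
            raise TypeError(f"unsupported OSC argument: {arg!r}")
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        else:
            type_tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
    return _osc_string(address) + _osc_string(type_tags) + payload


def connect_clip(layer: int, column: int) -> bytes:
    """Build a 'launch this clip' message (assumed Resolume 6/7 address)."""
    return osc_message(f"/composition/layers/{layer}/clips/{column}/connect", 1)


def send_udp(packet: bytes, host: str = "127.0.0.1", port: int = 7000) -> None:
    """Fire one OSC packet over UDP; port 7000 is an assumed default."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))


if __name__ == "__main__":
    # Launch clip 3 on layer 2 of a Resolume instance on this machine.
    send_udp(connect_clip(2, 3))
```

Writing the encoder by hand keeps the sketch dependency-free; in practice an existing OSC library or Max's own OSC objects would do the same job.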

This way of triggering video clips was the result of an experiment by Martin Boverhof and Sander Haakman during a performance at an art festival in Germany a couple of years ago. Only two variables are used: triggering video files and adjusting the opacity of a clip. We were amazed at how powerful these two variables are.
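Because only two variables are in play, the video side of a single note can be described as just two messages. The sketch below assumes, purely for illustration, that a note-on launches the clip and that its velocity (1 to 127) sets the layer's opacity, with a note-off dropping the layer to zero; the OSC addresses follow the same assumed Resolume 6/7 pattern and should be verified in your version.

```python
from __future__ import annotations


def note_on_messages(layer: int, column: int, velocity: int) -> list[tuple[str, float]]:
    """Return (OSC address, value) pairs for a note-on: launch + opacity."""
    if not 1 <= velocity <= 127:
        raise ValueError("note-on velocity must be 1..127")
    return [
        (f"/composition/layers/{layer}/clips/{column}/connect", 1),
        # Assumed mapping: velocity scaled linearly to a 0..1 opacity.
        (f"/composition/layers/{layer}/video/opacity", velocity / 127),
    ]


def note_off_messages(layer: int) -> list[tuple[str, float]]:
    """Assumed behaviour: a note-off simply drops the layer's opacity to 0."""
    return [(f"/composition/layers/{layer}/video/opacity", 0.0)]
```

Returning plain (address, value) pairs keeps the musical decision separate from how the messages are encoded and sent.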

Regarding lighting, we understand the newer ChamSys boards have built-in support for MIDI and timecode. What desk do you use?

Manuel: To drive the lighting in the Noisia - Outer Edges show, I use a ChamSys lighting desk. It is a very open environment: you can send MIDI, MIDI Show Control, OSC, timecode (LTC & MTC), UDP, serial data and of course DMX & Art-Net to the desk.

ChamSys support is extremely good, and the software version is 100% free. Compared to other lighting desk manufacturers, their hardware is much cheaper.

That said, a lighting desk is still much more expensive than a MIDI controller.

The two might look similar, as both have faders and buttons, but the difference is that a lighting desk has a brain.

You can store, recall and sequence states, something that is invaluable for a lighting designer and is now happening more and more in video land.

I have been researching how to bridge the gap between Ableton Live and ChamSys for 8 years.

This research led me to M2Q (short for Music-to-Cue), which acts as a bridge between Ableton Live and ChamSys. M2Q is a hardware solution designed together with Lorenzo Fattori, an Italian lighting designer and engineer. M2Q listens to MIDI messages sent from Ableton Live and converts them into ChamSys Remote Control messages, providing cue triggering and playback intensity control.

M2Q is a reliable, easy and fast lighting sync solution, and it enables non-linear lighting sync.

When using timecode, it is impossible to loop within a song, play the chorus one more time or alter the playback speed on the fly. You are basically limited to pressing play.

Because our lighting sync system is MIDI-based, the artist on stage has exactly the same freedom that Ableton's audio playback offers.
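The difference Manuel describes can be shown with a small simulation. It assumes a timecode lighting timeline programmed against the song's original, linear running time, and a MIDI approach where each Ableton clip carries its own cue; the section names, lengths and looks are made up. When the performer plays the chorus twice, the timecode timeline has already moved on, while the MIDI cues simply follow the clips.

```python
import bisect

# Made-up song: section lengths in seconds, as laid out in the original arrangement.
SECTION_LENGTHS = {"intro": 32.0, "chorus": 16.0, "outro": 16.0}

# Timecode approach: each look is keyed to an absolute time in the original song.
TIMELINE = [(0.0, "intro look"), (32.0, "chorus look"), (48.0, "outro look")]
TIMELINE_STARTS = [start for start, _ in TIMELINE]

# MIDI approach: each clip carries its own cue, wherever it ends up being played.
CLIP_CUES = {"intro": "intro look", "chorus": "chorus look", "outro": "outro look"}


def timecode_look(clock: float) -> str:
    """Return the last look whose start time is at or before `clock`."""
    index = bisect.bisect_right(TIMELINE_STARTS, clock) - 1
    return TIMELINE[index][1]


def perform(sections: list) -> tuple:
    """Return the looks each approach shows at the start of every played section."""
    clock = 0.0
    timecode_looks, midi_looks = [], []
    for section in sections:
        timecode_looks.append(timecode_look(clock))
        midi_looks.append(CLIP_CUES[section])
        clock += SECTION_LENGTHS[section]  # the clock only ever runs forward
    return timecode_looks, midi_looks


# The performer decides on stage to play the chorus one more time.
tc, midi = perform(["intro", "chorus", "chorus", "outro"])
print(tc)    # ['intro look', 'chorus look', 'outro look', 'outro look']
print(midi)  # ['intro look', 'chorus look', 'chorus look', 'outro look']
```

For a linear run-through both approaches agree; they only diverge once the performance departs from the original arrangement.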

Do you link it to Resolume?

ChamSys has a personality file (head file) for Resolume, which makes it possible to drive Resolume as a media server from the lighting desk. I must confess that I have been considering switching to Resolume for some time now, as it is a very cost-effective and stable solution compared to other media server platforms.

Video by Diana Gheorghiu

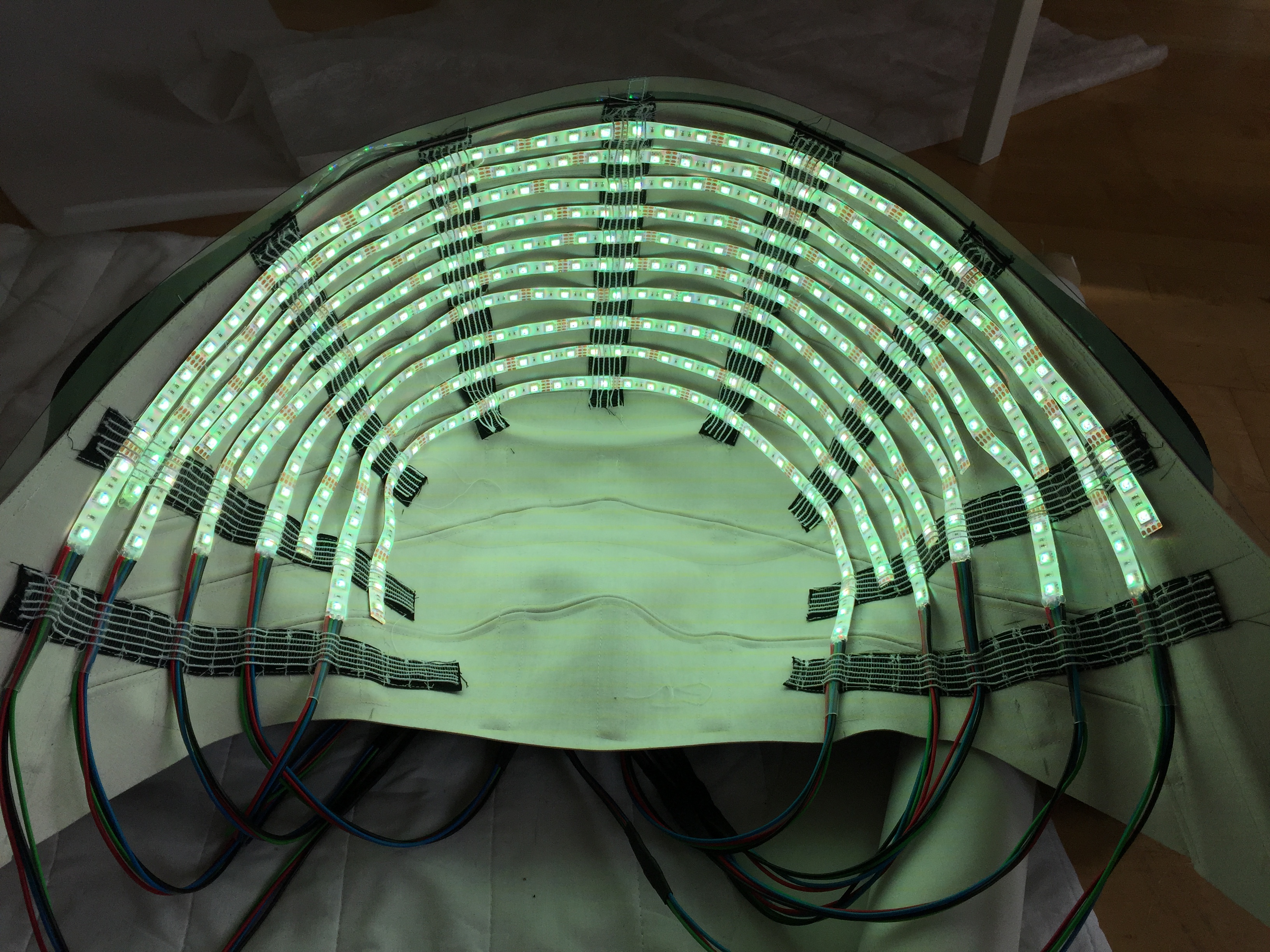

Tell us about the trio’s super cool headgear. They change color, strobe and dim. How?!

The LED suits were custom designed and built by Élodie Laurent. They are basically 3 generic LED PAR cans and have similar functionality.

They are connected to the lighting desk just like the rest of the lighting rig and are driven by the same system.

Fun fact: these are the only three lights we bring with us, so the Outer Edges show is extremely tourable.

The Noisia content is great in its quirkiness. Sometimes we see regular video clips, sometimes distorted human faces, sometimes exploding planets or mechanical animals. What’s the thought process behind the content you create? Is it track-specific?

The main concept behind this show is that every track has its own little world in this Outer Edges universe. Every track stands on its own and has a different focus on style and narrative.

Nik (one third of Noisia & art director) assembled a team of 10 international motion graphics artists, and together we took on the visualization of the initial 34 audio tracks. Cover artwork, video clips and general themes from the audio tracks formed the basis of the visuals for most of the tracks.

Photo by Diana Gheorghiu

Photo by Diana Gheorghiu

The lighting & video sync is so on point, we can’t stop talking about it. It must have taken hours of studio time & programming?

That was the whole idea behind the setup.

Instead of synchronizing everything in the light and video tracks, we separated the synchronizing process from the design process. This means we sequence everything in Ableton, and on the content side Resolume takes care of the rest. Updating a VJ clip is just a matter of dragging a new clip into Resolume.

This also resulted in Resolume becoming a big part of the design process, instead of only serving as a media server as it normally does.

During the design process, we run the Ableton set and watch how the clips get triggered. If we don't like something, we can easily replace the video clip with a new one or adjust, for instance, the scaling inside Resolume.

Some tracks that included 3D-rendered images took a bit longer, but one track, “Diplodocus”, took 30 minutes to make from start to finish. It was originally meant as a placeholder, but after seeing it synchronized, we liked its simplicity and boldness and decided to keep it in the show.

Here is some more madness that went down:

Video by Diana Gheorghiu

Is it challenging to adapt your concept show into different, extremely diverse festival setups? How do you output the video to LED setups that are not standard?

We mostly work with our rider setup, which consists of a big LED screen at the back and an LED banner in front of the booth, but for bigger festivals we can easily adjust the mapping setup inside Resolume.

At Rampage, we faced an extra challenge: coming up with a way to run 7 full HD outputs.

Photo by Diana Gheorghiu

Normally, Nik controls everything from the stage and we have a direct video line to the LED processor. Since all the connections to the LED screens were located at front of house (FOH), we used 2 laptops positioned there.

It was easy to adjust the Ableton Max for Live patch to send the triggers to two computers instead of one, and we wrote a small extra tool that sends all the MIDI controller data from the stage to FOH (to make sure Nik could still operate everything from the stage).
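Pointing the triggers at two machines is essentially a fan-out: every packet that used to go to one VJ laptop is sent to each FOH laptop in turn. Here is a minimal Python sketch, assuming plain UDP (the usual transport for OSC); the addresses in `FOH_TARGETS` are hypothetical, and this is not the crew's modified Max for Live patch.

```python
import socket

# Hypothetical FOH laptops and OSC ports; replace with your own network setup.
FOH_TARGETS = [("192.168.1.21", 7000), ("192.168.1.22", 7000)]


def fan_out(packet: bytes, targets) -> int:
    """Send the same UDP datagram to every (host, port) target.

    UDP gives no delivery guarantee, so a trigger can be lost on a busy
    network; the sender gets no error if a receiver misses a packet.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for host, port in targets:
            sock.sendto(packet, (host, port))
    return len(targets)
```

Called with an already-encoded OSC packet, for example `fan_out(packet, FOH_TARGETS)`, both laptops receive identical triggers from a single send routine, so the Ableton side stays unchanged apart from the target list.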

Talk to us about some features of Resolume that you find handy and would advise people out there to explore.

Resolume was a big part of the design process in this show. Using it almost like a mini After Effects, we stacked up effects until we reached the result we wanted. We triggered scaling, rotation, effects and opacity using Resolume's full OSC control. This makes it super easy to create spot-on synchronized shows with a minimal amount of pre-production.

This, combined with the really powerful mapping options, makes it an ideal tool to build our shows on!

What a great interview, Roy & Manuel.

Thanks for giving us a behind-the-scenes understanding of what it takes to run this epic show, day after day.

Noisia has been ruling the Drum & Bass circuit for a reason. Thumping, fresh & original music along with a remarkable show: what more could we want?

Here is one last video for a group rage:

Video by Diana Gheorghiu

Rinseout.

Credits:

Photo credits for the Noisia setup: Roy Gerritsen

Adhiraj, Refractor, for the on-point video edits.


Resolume Blog

This blog is about Resolume, VJ-ing and the inspiring things Resolume users make. Do you have something interesting to show the community? Send in your work!

Highlights

Gorgeous AV Production by Bob White

Occasionally, a certain project will catch your eye. When you start watching and listening, you find yourself drifting away for a few minutes as the audio and the visuals blend together into one beautiful harmony.

Such a project is Intermittently Intertwined by Bob White. Take a moment out of your day, and watch this in HD with some good speakers.

[youtubeshort]http://youtu.be/acqIfU-UsaI[/youtubeshort]

We were even more amazed when we found out that the video above is actually created in real time. It's not often that someone is equally talented at music, motion design and coding, so we had to find out more about the project.


Bob explains:

The basic rig is:

Ableton > Network Midi > ofApp > Syphon > Resolume > Avermedia GameHD

In Maya, I make a series of quick geometric loft animations and export these as Alembic files (.abc). Alembic files are point cache animation files that are typically used to transfer dynamic simulations from, say, Houdini to Maya. Because I wanted the geometric element to "grow", Alembic is a good vehicle, as I'm animating vertices.

The brilliant ofxalembic addon loads Alembic files as meshes in OpenFrameworks. Because my geometry has a fairly low poly count, I can run a lot of them at the same time, triggered by MIDI notes. Surprisingly, even with a lot of polys, playback of abc files is very fast.

My ofApp is very much a work in progress and the code is sketchy at best. The app has ~25 slots for abc files per scene. Additionally, I set up 6 scenes that can be switched with OSC messages. Each abc file can be triggered by an incoming MIDI note, and there are controls to randomize the playing of single abc files (single actions) or to trigger sections of an abc file in sequence (sequence of actions).
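Bob doesn't share his ofApp code, so the following is only a Python model of the trigger logic as he describes it, not openFrameworks code: 6 scenes switched by OSC, roughly 25 slots per scene, MIDI notes mapped to slots, a random "single action" mode and a "sequence of actions" mode that steps through sections of one file. The class name, the base note, the equal-length sections and all file names are assumptions.

```python
import random

NUM_SCENES = 6        # from Bob's description
SLOTS_PER_SCENE = 25  # "~25 slots", rounded for the sketch


class AbcSceneBank:
    """Hypothetical model of the ofApp's trigger logic (not Bob's code).

    slots[scene][slot] holds (file_name, frame_count), or None when empty.
    """

    def __init__(self, seed=None):
        self.slots = [[None] * SLOTS_PER_SCENE for _ in range(NUM_SCENES)]
        self.scene = 0
        self.rng = random.Random(seed)
        self.section_cursor = {}  # (scene, slot) -> index of the next section

    def load(self, scene, slot, file_name, frame_count):
        self.slots[scene][slot] = (file_name, frame_count)

    def switch_scene(self, scene):
        """What an incoming OSC scene-change message would call."""
        if not 0 <= scene < NUM_SCENES:
            raise ValueError(f"scene must be 0..{NUM_SCENES - 1}")
        self.scene = scene

    def on_note(self, note, base_note=36):
        """Single trigger: map a MIDI note to a slot in the current scene."""
        slot = note - base_note
        if 0 <= slot < SLOTS_PER_SCENE and self.slots[self.scene][slot]:
            return self.slots[self.scene][slot][0]
        return None  # note outside the slot range, or an empty slot

    def random_single_action(self):
        """Single action: pick one loaded file from the current scene at random."""
        loaded = [entry[0] for entry in self.slots[self.scene] if entry]
        return self.rng.choice(loaded) if loaded else None

    def next_section(self, slot, sections):
        """Sequence of actions: step through one file in equal frame ranges."""
        entry = self.slots[self.scene][slot]
        if entry is None:
            return None
        _, frames = entry
        key = (self.scene, slot)
        index = self.section_cursor.get(key, 0)
        self.section_cursor[key] = (index + 1) % sections  # wrap around
        return index * frames // sections, (index + 1) * frames // sections
```

Keeping a separate cursor per (scene, slot) lets each file resume its sequence where it left off after a scene switch.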

I recorded a song I wrote in Ableton and set up a number of Network MIDI tracks to send data to my ofApp. I then paint notes that drive the animation. The network MIDI sends trigger data directly to the ofApp. Additionally, I send minimal OSC messages to change scenes in my app.

I love the Resolume M4L devices and I would use them, but because I'd finalised the song mix in the arrangement view, I used the showsync.info M4L devices to convert MIDI notes to OSC. The OSC changes scenes, clears the screen and moves the camera. Alternatively, if I were working in the session view, perhaps looping or performing live, I would use the Resolume M4L devices on clip launch to send OSC.

The ofApp has Syphon enabled so I can display it in Resolume. I have some effects on it, like Mirror and Edge Detection, to sweeten it up. Next, I would love to explore having Ableton control more of the parameters in Resolume. I would also love to put this whole app into an FFGL plugin; that way I wouldn't have to rely on Syphon. So much to learn :)

On its own, the animation would be kinda static if it were not for the mirror effect. It's refreshing to be able to easily change the character of the animation using Resolume in real time. Having the ability to "paint" the animation and see the layered effects in real time allows, I think, for a more immediate process. For me it's important to be able to work fast, so as not to get caught up in minutiae.

Check out more of Bob's work at http://www.bobwhitemedia.com

The Ultimate Resolume/Ableton AV Setup, Including How-To

Everyone loves presents, and BirdMask by ASZYK/Neal Coghlan is a gift that keeps on giving. First watch the video below. Do it now.


From Neal's description:

It's taken a while but finally, here is the first upload of BIRDMASK Visuals. I first started work on these way back at the end of 2010. Like my Tasty Visuals, they started out with some miscellaneous illustration. The elements came together quite nicely and I started to form compositions out of them which became geometric, tribal bird faces. Like with most of my illustrations, I couldn't resist bringing it alive by animating it.

In their earliest form there were a lot fewer clips, and they weren't in HD. A lot of elements didn't fit together well either, so mixing between faces wasn't as smooth. In its current form, the set is made up of 6 different faces, each one with 8 layers and 4 different clips per layer, making a total of 192 clips. These are all loaded into Resolume and triggered using Ableton and an iPad. This video was made by recording a Resolume composition, with all the clips being triggered live. This piece wasn't composited using FCP or Premiere (or After Effects, for that matter)! It was all done live.

BIRDMASK visuals made their debut in Geneva at Mapping Festival in May 2011 (mappingfestival.ch/2011/types/?artist=1285&lang=en) and since then have been played in clubs across London. The biggest showing was during Channel 4's House Party (channel4.com/programmes/house-party/articles/aszyk) where we performed alongside RnB legends 'Soul II Soul', who played an amazing set, mixing Reggae, House, RnB and Garage.

The set is still evolving and work has begun on a 3D version. I'm hoping to port them into UNITY 3D where I can make them even more reactive...

The track is called 'Elephant & Castle' by ASZYK - soundcloud.com/aszyk/elephant-castle-v1

After enjoying the lovely abstract animation, tight beat sync, lush colours and subtle perspective work, you find there's more. Not one to keep his secrets hidden, Neal shares a how-to video showing how this set of AV magic is put together. Revealing tricks of the trade, this breakdown is pure magic, baby.

Check out more of Neal's work, and then head back to your own studio, and hang your head in shame for not being as good as this guy. I want to have his babies.

Max for Live Resolume Patches

Check out this new set of Max for Live patches that let you control Resolume from Ableton Live without any tedious MIDI mapping. You can trigger clips and control parameters, so once it's all set up, all you have to do is concentrate on your music and effects in Live, and Resolume will just follow.

Clip Launcher

This is a simple way to connect clips in Live to clips in Resolume. Simply drop it on a track in Live, and it will automatically trigger clips in Resolume when you trigger clips in Live. The mapping corresponds exactly: clip 1 in track 1 in Live connects to clip 1 in layer 1 in Resolume, clip 2 to clip 2, etc.

Parameter Forwarder

Dropping this guy after an effect in an effect chain lets you forward parameter information to Resolume. You can choose which parameter you want to forward from a dropdown and send it to any parameter in Resolume by entering its OSC address.
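Under the hood, forwarding a parameter mostly means rescaling a value from the Live device's own range into whatever range the Resolume parameter expects, then sending it to the OSC address you entered. The sketch below assumes a 0 to 1 target range (common for Resolume parameters such as opacity, but check yours) and a hypothetical macro knob running from 0 to 127; it is not the Parameter Forwarder's actual code.

```python
def forward_value(value: float, src_min: float, src_max: float,
                  dst_min: float = 0.0, dst_max: float = 1.0) -> float:
    """Linearly rescale a Live parameter value into the target range, clamped."""
    if src_max == src_min:
        raise ValueError("source range must not be empty")
    t = (value - src_min) / (src_max - src_min)
    t = min(max(t, 0.0), 1.0)  # keep out-of-range automation inside the target
    return dst_min + t * (dst_max - dst_min)


# Hypothetical example: a macro knob (0..127) halfway up, forwarded to a
# 0..1 parameter such as a layer's opacity.
print(forward_value(63.5, 0.0, 127.0))  # 0.5
```

A linear map is the simplest choice; parameters that feel logarithmic (filter cutoff, for instance) would need a different curve.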

Resolume Dispatcher

The Dispatcher connects the Clip Launcher and Parameter Forwarder to Resolume. You always need an active Dispatcher in order to communicate between Live and Resolume (but you can’t have more than one).
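The division of labour between the three devices resembles a single shared sender that every Clip Launcher and Parameter Forwarder goes through. Below is a hypothetical Python model of that idea, not the devices' real implementation: it enforces the one-Dispatcher rule, and `launch_clip` applies the Clip Launcher's one-to-one mapping (Live track n, clip m to Resolume layer n, clip m) using an assumed Resolume 6/7 OSC address pattern.

```python
from typing import Callable, Optional


class Dispatcher:
    """Hypothetical model: the single channel all devices send through."""

    _active: Optional["Dispatcher"] = None

    def __init__(self, send: Callable[[str, float], None]):
        if Dispatcher._active is not None:
            raise RuntimeError("only one active Dispatcher is allowed")
        self._send = send
        Dispatcher._active = self

    @classmethod
    def active(cls) -> "Dispatcher":
        if cls._active is None:
            raise RuntimeError("no active Dispatcher: add one to the Live set")
        return cls._active

    def close(self) -> None:
        if Dispatcher._active is self:
            Dispatcher._active = None

    def dispatch(self, address: str, value: float) -> None:
        self._send(address, value)


def launch_clip(track: int, clip: int) -> None:
    """Clip Launcher mapping: Live track n, clip m -> Resolume layer n, clip m."""
    address = f"/composition/layers/{track}/clips/{clip}/connect"
    Dispatcher.active().dispatch(address, 1)
```

In a real setup, `send` would wrap an OSC encoder and a UDP socket; here it is any callable, which also makes the model easy to test.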

Download

Download v2.2, compatible with Resolume 6.

Download v2.3, compatible with Resolume 7.

Download v1.10, compatible with Resolume 5 and earlier.

Instructions PDF

Version 1.8 and up now also send BPM values and Start/Stop messages...

Thanks to Mattijs Kneppers from Arttech.nl for creating these handy patches!

Trigger Resolume Clips With Max for Live

Getting tired of endless MIDI mapping to trigger clips in Resolume with Ableton Live? Check out these Max for Live patches. They translate MIDI notes played in Live into OSC messages for Resolume, which means you don't have to do any complicated & cumbersome MIDI mapping to trigger clips in Resolume.

Download ResolumeM4L_1.zip

We hope you like it too! Let us know. A million thanks go out to Pandelis Diamantides for creating these patches for us. Check out his music on Facebook.
